April 21, 2020

After a busy 2019, legal developments related to artificial intelligence and automated systems (“AI”) continued apace into the new decade, and tangible regulatory frameworks began to take shape on both sides of the Atlantic. Continuing to tread lightly, in January 2020 the U.S. federal government issued “AI principles” to guide agencies in regulating the private sector, a tentative first step towards federal regulatory oversight of AI.[1] The following month, the European Commission (“EC”) published its comprehensive and highly anticipated proposal for the regulation of AI in the EU. Now, as lawmakers worldwide focus efforts and resources on the evolving pandemic crisis and the private sector is hamstrung by shelter-in-place orders, the forward momentum has faltered. Nonetheless, we offer this brief overview of the most recent developments in the AI space and take a deep dive into the fast-evolving status of intellectual property (“IP”) policy on AI.

I. U.S. FEDERAL LEGISLATION & POLICY

OMB Guidance for Federal Regulatory Agencies

The February 2019 Executive Order “Maintaining American Leadership in Artificial Intelligence” (“EO”) directed the director of the Office of Management and Budget (“OMB”) to issue a memorandum to the heads of all agencies that would “inform the development of regulatory and non-regulatory approaches” to AI that “advance American innovation while upholding civil liberties, privacy, and American values,” and that would consider ways to reduce barriers to the use of AI technologies in order to promote their innovative application. The OMB director was to act in coordination with the directors of the Office of Science and Technology Policy, the Domestic Policy Council, and the National Economic Council, and in consultation with other relevant agencies and key stakeholders (as determined by the OMB).[2] The White House Office of Science and Technology Policy further indicated in April 2019 that regulatory authority will be left to agencies to adjust to their sectors, but with high-level guidance from the OMB, as directed by the EO.[3]

In January 2020, the OMB published a draft memorandum featuring 10 “AI Principles” and outlining its proposed approach to regulatory guidance for the private sector. The approach echoes the “light-touch” regulatory stance of the 2019 EO, noting that promoting innovation and growth of AI is a “high priority” and that “fostering innovation and growth through forbearing from new regulations may be appropriate.”[4] As expected, the principles favor flexible regulatory frameworks consistent with the EO[5] that allow for rapid change and updates across sectors, rather than one-size-fits-all regulations, and urge European lawmakers to avoid heavy regulatory frameworks. The key takeaway for agencies is to encourage AI and, when regulation is necessary, to tread lightly rather than overregulate and risk impeding AI development.

The 10 “AI Principles” for U.S. regulatory agencies are:

- Public Trust in AI: In response to concerns about the risks of AI, the memorandum notes that it is “important that the government’s regulatory and non-regulatory approaches to AI promote reliable, robust, and trustworthy AI applications, which will contribute to public trust in AI.”

- Public Participation: An agency should foster public trust by encouraging public participation in its AI regulation; therefore, “[a]gencies should provide ample opportunities for the public to provide information and participate in all stages of the rulemaking process, to the extent feasible . . . .”

- Scientific Integrity and Information Quality: Agencies should develop regulatory approaches “[c]onsistent with the principles of scientific integrity” to foster public trust. “Best practices include transparently articulating the strengths, weaknesses, intended optimizations or outcomes, bias mitigation, and appropriate uses of the AI application’s results.”

- Risk Assessment and Management: Agencies should not be overly cautious in regulating. “It is not necessary to mitigate every foreseeable risk . . . .” Instead, agencies should use a practical cost-benefit analysis: “[A] risk-based approach should be used to determine which risks are acceptable and which risks present the possibility of unacceptable harm, or harm that has expected costs greater than expected benefits.”

- Benefits and Costs: Again, agencies are directed to carefully consider the costs of regulation and to avoid hampering innovation. “Such consideration will include the potential benefits and costs of employing AI, when compared to the systems AI has been designed to complement or replace, whether implementing AI will change the type of errors created by the system, as well as comparison to the degree of risk tolerated in other existing ones.”

- Flexibility: Agencies are directed to eschew “[r]igid, design-based regulations that attempt to prescribe the technical specifications of AI applications” that will become impractical and ineffective “given the anticipated pace with which AI will evolve ….” Instead, “agencies should pursue performance-based and flexible approaches that can adapt to rapid changes and updates to AI applications.”

- Fairness and Non-Discrimination: Responding to concerns that AI may incorporate or create harmful bias, the memorandum notes that “[a]gencies should consider in a transparent manner the impacts that AI applications may have on discrimination,” while also observing that “AI applications have the potential of reducing present-day discrimination caused by human subjectivity.”

- Disclosure and Transparency: Continuing with the theme of fostering public trust, agencies are directed to consider the role disclosures may play (e.g., disclosures when AI is being used). However, “[w]hat constitutes appropriate disclosure and transparency is context-specific, depending on assessments of potential harms, the magnitude of those harms, the technical state of the art, and the potential benefits of the AI application.”

- Safety and Security: Agencies are to implement controls “to ensure the confidentiality, integrity, and availability of the information processed, stored, and transmitted . . . ,” and consider cybersecurity and potential risks relating to malicious AI deployment.

- Interagency Coordination: Agencies are directed to coordinate with each other to share experiences and to ensure “consistency and predictability of AI-related policies that advance American innovation and growth in AI,” while “appropriately protecting privacy, civil liberties, and American values and allowing for sector- and application-specific approaches when appropriate.”

Consistent with its light-touch approach, the draft memorandum also proposes several non-regulatory approaches, including sector-specific policy guidance or frameworks, pilot programs and experiments, and voluntary consensus standards, and it particularly encourages cooperation with the private sector. The memorandum also advocates that agencies foster AI development by providing access to federal data and models for AI research and development, communicating with the public in a meaningful way about approaches to AI, participating in the development of consensus standards and conformity assessment activities, and cooperating with international regulatory bodies.

Comments on this draft memorandum closed on March 13, 2020, with 81 submissions from individuals, organizations, and companies.[6] An updated, finalized memorandum is forthcoming. As currently drafted, agencies will have 180 days from the issuance of the final memorandum to develop plans consistent with the AI Principles.

II. EU LEGISLATION & POLICY

On February 19, 2020, the EC presented its long-awaited proposal for comprehensive regulation of AI at EU level: the “White Paper on Artificial Intelligence – A European approach to excellence and trust” (“White Paper”).[7] In an op-ed published on the same day, the president of the EC, Ursula von der Leyen, wrote that the EC would not leave digital transformation to chance and that the EU’s new digital strategy could be summed up with the phrase “tech sovereignty.”[8]

As covered in more detail in our recent client alert “EU Proposal on Artificial Intelligence Regulation Released,” the White Paper favors a risk-based approach with sector and application-specific risk assessments and requirements, rather than blanket sectoral requirements or bans. The EC also released a series of accompanying documents, the “European strategy for data” (“Data Strategy”)[9] and a “Report on the safety and liability implications of Artificial Intelligence, the Internet of Things and robotics” (“Report on Safety and Liability”).[10] The documents outline a general strategy, discuss objectives of a potential regulatory framework, and address a number of potential risks and concerns related to AI. The White Paper, which was previewed by Ms. von der Leyen at the beginning of her presidency, is the first step in the legislative process.[11] The draft legislation, which is part of a bigger effort to increase public and private investment in AI to more than €20 billion per year over the next decade,[12] is expected by the end of 2020.

While the first part of the White Paper mostly contains general policy proposals intended to boost AI development, research and investment in the EU, the second outlines the main features of a proposed regulatory framework for AI. In the EC’s view, lack of public trust is one of the biggest obstacles to a broader proliferation of AI in the EU. Thus, as we have noted previously,[13] the EC seeks “first out of the gate” status and aims to increase public trust by attempting to regulate the inherent risks of AI—not unlike the General Data Protection Regulation (“GDPR”). The main risks identified by the EC concern fundamental rights (including data privacy and non-discrimination) as well as safety and liability issues. In addition to proposing certain adjustments to existing legislation that impacts AI, the EC concludes that new regulations specific to AI are necessary to address these risks.

According to the White Paper, the core issue for any future legislation is the scope of its application: the assumption is that any legislation would apply to products and services “relying on AI.” Furthermore, the EC identifies “data” and “algorithms” as AI’s main constituent elements, but also stresses that the definition of AI in a regulatory context must be precise enough to provide legal certainty while remaining flexible enough to accommodate technical progress.

In terms of substantive regulation, the EC favors a context-specific, risk-based approach—instead of a GDPR “one size fits all” approach. An AI product or service will be considered “high-risk” when two cumulative criteria are fulfilled:

- Critical Sector: The AI product or service is employed in a sector where significant risks can be expected to occur. Those sectors should be specifically and exhaustively listed in the legislation; for instance, healthcare, transport, energy and parts of the public sector, such as the police and the legal system.

- Critical Use: The AI product or service is used in such a manner that significant risks are likely to arise. The assessment of the level of risk of a given use can be based on the impact on the affected parties; for instance, where the use of AI produces legal effects, leads to a risk of injury, death or significant damage.

If an AI product or service fulfills both criteria, it will be subject to the mandatory requirements of the new AI legislation. Importantly, the use of AI-based applications for certain purposes will always be considered high-risk when those applications fundamentally impact individual rights. Examples include the use of AI for recruitment processes or for remote biometric identification (such as facial recognition). Moreover, even if an AI product or service is not considered “high-risk,” it will remain subject to existing EU rules, such as the GDPR.[14]
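To make the structure of the proposed test concrete, the following Python sketch models the two cumulative criteria and the purpose-based exception described above. It is a simplified illustration under stated assumptions, not a restatement of the White Paper: the sector set contains only the examples named above, the purpose set contains only recruitment and remote biometric identification, and the `significant_risk_likely` flag is a hypothetical stand-in for the case-by-case “critical use” assessment.

```python
from dataclasses import dataclass

# Hypothetical, illustrative sector list based only on the examples named in
# the White Paper summary; actual legislation would contain its own exhaustive list.
CRITICAL_SECTORS = {"healthcare", "transport", "energy", "police", "legal system"}

# Hypothetical examples of purposes treated as high-risk regardless of sector.
ALWAYS_HIGH_RISK_PURPOSES = {"recruitment", "remote biometric identification"}


@dataclass
class AIApplication:
    sector: str
    purpose: str
    significant_risk_likely: bool  # stand-in for the "critical use" assessment


def is_high_risk(app: AIApplication) -> bool:
    """Return True if the application would be 'high-risk' under this sketch."""
    # Certain purposes are high-risk as such, whatever the sector.
    if app.purpose in ALWAYS_HIGH_RISK_PURPOSES:
        return True
    critical_sector = app.sector in CRITICAL_SECTORS
    critical_use = app.significant_risk_likely
    # Otherwise the two criteria are cumulative: both must be met.
    return critical_sector and critical_use


# Usage: a critical sector alone is not enough; both criteria must be met.
print(is_high_risk(AIApplication("healthcare", "diagnostic triage", True)))       # True
print(is_high_risk(AIApplication("healthcare", "appointment scheduling", False)))  # False
print(is_high_risk(AIApplication("retail", "recruitment", False)))                # True
```

The explicit check for fixed purposes before the two-criteria test mirrors the order of the summary above: the exception overrides the sector analysis rather than being one more sector.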

Harking back to the 2019 “Ethics Guidelines for Trustworthy Artificial Intelligence” drafted by the High Level Expert Group on Artificial Intelligence,[15] the EC sets out six key categories of requirements that we can expect to see in the upcoming AI legislation: rules governing training data; data and record-keeping requirements; information provision, transparency and accountability; robustness and accuracy; human oversight; and specific requirements for remote biometric identification. The EC also previews a regulatory framework for data and product liability legislation in its accompanying Data Strategy and Report on Safety and Liability.

Companies active in AI should closely follow recent developments in the EU given the proposed geographic reach of the future AI legislation, which is likely to affect all companies doing business in the EU. While the current public health crisis may well delay the timeline, we are still likely to see legislative activity in Europe in the near term. In the meantime, the EC has launched a public consultation period and requested comments on the proposals set out in the White Paper and the Data Strategy, providing an opportunity for companies and other stakeholders to provide feedback and shape the future EU regulatory landscape. Comments may be submitted until May 19, 2020 at https://ec.europa.eu/info/consultations_en.

III. INTELLECTUAL PROPERTY

A. Update on USPTO AI Policy

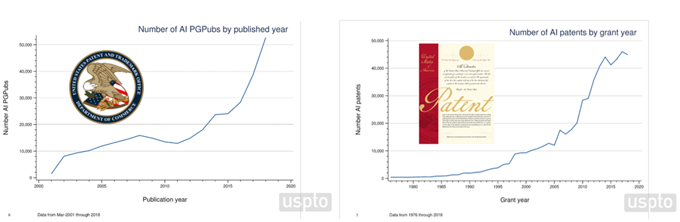

U.S. patent activity involving AI continues to increase dramatically. During the U.S. Patent and Trademark Office’s (“USPTO”) recent Patent Public Advisory Committee (“PPAC”) Quarterly Meeting, Senior Policy Advisor and Acting Chief of Staff Coke Stewart remarked that “over the past several years” the growth in patent applications and patent grants touching on AI has been “radical,”[16] as demonstrated by the following graphics.[17]

As to how the USPTO characterizes AI, Acting Chief of Staff Stewart explained that the USPTO today views AI as a “tool,” not as an independent entity capable of, for example, innovation. In response to a question about how AI could fulfill patent law’s duty of candor, Ms. Stewart stated, “I think it’s fair to say the way the [USPTO] is seeing [AI] at this point is they see AI as a tool, much like a surgeon and a scalpel or a photographer with a camera, that’s being used to conceive of inventions. We’re not really seeing artificial intelligent machines spontaneously creating.”[18] Nonetheless, in remarks delivered at “Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software,” USPTO Deputy Director Laura Peter confirmed that “it has been reported” that a patent application pending before the USPTO names a machine as an inventor. While Deputy Director Peter declined to comment specifically on that application, she highlighted a number of the Office’s “forthcoming AI milestones,” including, among other items addressed below, a possible future “opportunity to further discuss patent applications on inventions for which a machine is claimed to be an inventor.”[19]

One notable forthcoming milestone is the USPTO’s report summarizing the responses to, and potentially providing the USPTO’s conclusions regarding, its two recent Federal Register Notices on AI.[20] As we explained in an earlier client alert,[21] on August 27, 2019, the USPTO published a request for public comments on 12 patent-related questions regarding AI inventions,[22] including the elements of an “AI Invention,” the character of natural persons’ contributions to AI Inventions, and whether revisions were needed to the current law on inventorship, ownership, eligibility, enablement, and/or level of ordinary skill in the art to take into account AI developments. Most notably, the USPTO requested public comment on whether an AI machine could be the inventor of an invention described in a U.S. patent.[23] Shortly thereafter, the USPTO issued a second Federal Register Notice requesting public comment on “the fields of copyright, trademark, database protections, and trade secret law, among others,” which the USPTO believed “may be similarly susceptible to the impacts of developments in AI.”[24]

As of March 18, 2020, the USPTO had received nearly 200 responses to the two requests from individuals, corporations, associations, academia, and others.[25] The USPTO’s Acting Chief of Staff Coke Stewart remarked in January 2020 that this “feedback” was “incredible” and said she expected the Office’s report summarizing the responses to be published in spring 2020. In her remarks at “Trust, but Verify,” Deputy Director Peter indicated this forthcoming report “may include relevant conclusions.”[26]

Another forthcoming milestone for the USPTO’s work on AI is a second report, slated for “late Spring,” from the USPTO Office of the Chief Economist on the patent landscape of artificial intelligence-related inventions.[27] In addition, one of the milestones highlighted by Deputy Director Peter has already come to pass: the USPTO’s new internet portal on “all intellectual property-related initiatives and content on [AI] technologies, including Federal Register Notices, research and reports, and news stories” launched on March 3, 2020.

Finally, the USPTO continues to make clear that it views its role in protecting and promoting the private sector’s development of AI in the larger context of the federal government’s policy. As Senior Policy Advisor and Acting Chief of Staff Stewart noted at the quarterly PPAC meeting (and as discussed above), the OMB issued a draft Memorandum on January 7, 2020, providing “guidance to all Federal agencies to inform the development of regulatory and non-regulatory approaches regarding technologies and industrial sectors that are empowered or enabled by” AI, and proposed ways “to reduce barriers to the development and adoption of AI technologies.”[28]

B. Update on the USPTO’s New Eligibility Guidelines

On October 17, 2019, the USPTO issued additional patent subject matter eligibility guidance (“October 2019 PEG”), following its January 2019 guidance (“2019 PEG,” addressed in a previous Gibson Dunn client alert[29]), to help clarify the process that examiners should undertake when evaluating whether a pending claim is directed to an abstract idea under the Supreme Court’s two-step Alice test and is thus ineligible for patent protection under 35 U.S.C. § 101.[30] The October 2019 PEG addresses five major themes from the comments on the 2019 PEG:

- evaluating whether a claim recites a judicial exception;

- the groupings of abstract ideas enumerated in the 2019 PEG;

- evaluating whether a judicial exception is integrated into a practical application;

- the prima facie case and the role of evidence with respect to eligibility rejections; and

- the application of the 2019 PEG in the patent examining corps.[31]

The USPTO also issued three appendices with the updated guidance, including Appendix 1, which contained new examples “illustrative” of major themes from the comments.[32]

The following are a few notable items from the October 2019 PEG:

The meaning of “recites” in Step 2A, Prong One. Step 2A, Prong One asks whether the claim “recites an abstract idea, law of nature, or natural phenomenon.”[33] A claim “recites a judicial exception when the judicial exception is ‘set forth’ or ‘described’ in the claim. While the terms ‘set forth’ and ‘describe’ are thus both equated with ‘recite,’ their different language is intended to indicate that there are two ways in which an exception can be recited in a claim.”[34] “Set forth” is used with regard to claims where, for example, the “identifiable” mathematical expression is “clearly stated,” as in Diamond v. Diehr, whereas “describes” is used where an identifiable concept (like that of intermediated settlement, as recited in the claim at issue in Alice v. CLS Bank) is not called out by name.[35]

Multiple abstract ideas in a claim. Examiners are instructed not to “parse” claims that “recite multiple abstract ideas, which may fall in the same or different groupings, or multiple laws of nature” in Step 2A, Prong One.[36] Instead, the examiner “should consider the limitations together” to be an abstract idea for Step 2A, Prong Two (which asks whether the claim recites “additional elements that integrate the judicial exception into a practical application”[37]) and Step 2B (which asks whether the claim recites “additional elements that amount to significantly more than the judicial exception”[38]), “rather than a plurality of separate abstract ideas to be analyzed individually.”[39]

Identifying abstract ideas. The October 2019 PEG provides further guidance on identifying the abstract ideas enumerated in the 2019 PEG and their relationship to judicial decisions, including with respect to:[40]

Mathematical concepts: Commenters suggested distinguishing between the types of math recited in the claims when making an eligibility determination. After consideration, the USPTO declined to implement this suggestion.[41]

Mathematical calculations: “[N]o particular word or set of words,” such as “calculating,” is required to indicate that a claim recites a mathematical calculation.[42]

Mental processes: “Claims do not recite a mental process when they do not contain limitations that can practically be performed in the human mind, for instance when the human mind is not equipped to perform the claim limitations.”[43] Commenters suggested that “an examiner should determine that a claim, when given its broadest reasonable interpretation, recites a mental process only when the claim is performed entirely in the human mind.”[44] After consideration, the USPTO did not adopt this suggestion, as it was not consistent with case law holding that “[c]laims can recite a mental process even if they are being claimed as being performed on a computer[]”[45] or with a physical aid, like pencil and paper.[46]

Examination procedure. Additional guidance was provided regarding the examination procedure for tentative abstract ideas.[47]

Step 2A Inquiry. A “new mini-flowchart” can be found on page 11 of the October 2019 PEG, which “depict[s] the two-prong analysis that is now performed in order to answer the Step 2A inquiry.”[48]

Improving the Functioning of Technology. The October 2019 PEG further explains the process for determining whether the claimed invention improves the functioning of a computer or other technology.[49] As noted in previous client alerts, the 2019 PEG clarified that the “improvements” analysis in Step 2A does not consider whether the claimed elements reflect well-understood, routine, conventional activity.[50] Rather, well-understood, routine, conventional activity will only be considered if the analysis proceeds to Step 2B (whether the claims recite additional elements that amount to “significantly more” than the judicial exception).[51]
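The order of the Step 2A and Step 2B inquiries described above can be summarized as a short decision procedure. The Python sketch below is a simplified illustration, not the USPTO’s flowchart: it assumes the threshold question of whether the claim falls within a statutory category has already been answered, and each boolean field is a hypothetical placeholder for an examiner’s legal conclusion, which the code records rather than decides.

```python
from dataclasses import dataclass


@dataclass
class ClaimAnalysis:
    # Each flag records a conclusion already reached for one step; the sketch
    # does not decide these questions, it only shows how they combine.
    recites_judicial_exception: bool              # Step 2A, Prong One
    integrated_into_practical_application: bool  # Step 2A, Prong Two
    significantly_more: bool                      # Step 2B


def eligibility_outcome(c: ClaimAnalysis) -> str:
    if not c.recites_judicial_exception:
        return "eligible: not directed to a judicial exception (Prong One)"
    if c.integrated_into_practical_application:
        return "eligible: exception integrated into a practical application (Prong Two)"
    # Only at Step 2B is well-understood, routine, conventional activity weighed.
    if c.significantly_more:
        return "eligible: additional elements amount to significantly more (Step 2B)"
    return "ineligible under 35 U.S.C. § 101"


# Usage: a hypothetical claim that recites an abstract idea but ties it to a
# practical application ends the analysis at Prong Two.
claim = ClaimAnalysis(
    recites_judicial_exception=True,
    integrated_into_practical_application=True,
    significantly_more=False,
)
print(eligibility_outcome(claim))
# eligible: exception integrated into a practical application (Prong Two)
```

The early returns reflect the point made above: a claim that clears Prong Two never reaches Step 2B, so the “well-understood, routine, conventional” inquiry only matters for claims that fail both prongs of Step 2A.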

Appendix 1 to the October 2019 PEG will be of particular use to practitioners on the patent application front lines who are crafting claims to cover AI-related inventions. According to the USPTO, “the examples are intended to illustrate the proper application of the eligibility analysis to a variety of claims in multiple technologies.”[52] Example 46, for instance, pertains to a livestock management system that automatically detects and tracks the behavior of livestock animals using information provided by sensors and smart labels.[53] The information is stored in a herd database, which can contain a plurality of possible behavior patterns that are either normal or indicative of disease, stress, or other issues of interest to the farmer.[54] Based on behavioral triggers, the system automatically controls farm equipment, like sorting gates and automatic feed dispensers.[55] The example provides four exemplary claims, and explains why each is or is not eligible for patenting.[56] Notably, eligible claims tied the elements relating to machine learning (i.e., receiving the electronic data from the various sensors, analyzing it, comparing it to a database that is automatically updated to identify behavioral patterns associated with illness or stress) to physical, practical applications, like a feed dispenser capable of providing therapeutically effective additives to the animals’ feed or a sorting gate capable of automatically segregating the ill animal from the rest of the herd.[57]

For companies using or developing AI-related inventions, the rules on patenting are likely to be in flux in the near term as the USPTO adapts to the changing nature of technology and innovation. We will continue to closely monitor developments, and stand ready to advise companies seeking to navigate a path to maximizing the quality and value of their patent protection.

C. International Updates on IP Law & AI

Just as the USPTO has continued to address the impact of artificial intelligence on U.S. intellectual property law, so has the international legal community. For example, the World Intellectual Property Organization (“WIPO”) published its Draft Issues Paper on Intellectual Property Policy and Artificial Intelligence[58] on December 19, 2019. Three intergovernmental organizations (including the European Union), 46 non-governmental organizations (including the American Intellectual Property Law Association (“AIPLA”), Intellectual Property Owners Association (“IPO”), International Association for the Protection of Intellectual Property (“AIPPI”), and a variety of international consortiums of writers and creators), 36 member states, 60 corporations (including Alibaba, BlackBerry, Ericsson, Getty Images, Intel, Merck, and Tencent Holdings),[59] and 120 individuals provided comments on the Draft Issues Paper.[60] WIPO had intended to publish a revised issues paper by the end of April 2020, with a second session of the WIPO Conversation on IP and AI to take place May 11-12, 2020.[61] Due to the impact of the worldwide pandemic, the second session has been postponed indefinitely, and, as of this writing, WIPO has not indicated whether it will meet its self-imposed April deadline for publishing its revised issues paper.

Inventorship and ownership of AI inventions were a principal focus of the draft issues paper—understandable, given the European Patent Office’s and U.K. Intellectual Property Office’s recent highly publicized rejection of patent applications in which an AI system (DABUS) was named as an inventor.[62]

IV. AUTONOMOUS VEHICLES

A. House Panel Discusses Regulation of Autonomous Vehicles (“AVs”)

In 2019, federal lawmakers demonstrated renewed interest in a comprehensive AV bill aimed at speeding up the adoption of autonomous vehicles and deploying a regulatory framework. In July 2019, the House Energy and Commerce Committee and Senate Commerce Committee sought stakeholder input from the self-driving car industry in order to draft a bipartisan and bicameral AV bill, prompting stakeholders to provide feedback to the committees on a variety of issues involving autonomous vehicles, including cybersecurity, privacy, disability access, and testing expansion. On February 11, 2020, the House Committee on Energy and Commerce, Subcommittee on Consumer Protection and Commerce, held a hearing titled “Autonomous Vehicles: Promises and Challenges of Evolving Automotive Technologies.” In a memorandum issued in advance of the hearing, the Committee stated that in 2018, “36,560 people were killed in motor vehicle traffic crashes on U.S. roadways” and noted that “[n]inety-four percent of crashes are thought to be caused by driver error.”[63] Three consumer issues were addressed at the hearing: driver and passenger safety; autonomous vehicle testing; and cybersecurity concerns.

As we have addressed in previous legal updates,[64] this is not Congress’s first attempt to regulate AVs. The U.S. House of Representatives passed the Safely Ensuring Lives Future Deployment and Research In Vehicle Evolution (SELF DRIVE) Act (H.R. 3388)[65] by voice vote in September 2017, but its companion bill, the American Vision for Safer Transportation through Advancement of Revolutionary Technologies (AV START) Act (S. 1885),[66] stalled in the Senate as a result of holds from Democratic senators who expressed concerns that the proposed legislation was immature and underdeveloped in that it would “indefinitely” preempt state and local safety regulations even in the absence of federal standards.[67] Federal regulation of autonomous vehicles has so far faltered in the new Congress, as the SELF DRIVE Act and the AV START Act have not been reintroduced since expiring with the close of the 115th Congress.[68]

Observers have commented that “the main sticking point in negotiations continues to be the potential federal preemption of state and local regulations.”[69] Witnesses at the February 2020 hearing voiced concerns over adopting federal legislation, and thus preempting state regulation, without firm safety standards in place. State regulatory activity has continued to accelerate, adding to the already complex mix of regulations that apply to companies manufacturing and testing AVs. As outlined in our 2019 Artificial Intelligence and Automated Systems Annual Legal Review, state regulations vary significantly.[70]

Manufacturers and lawmakers at the hearing expressed concern that the federal government’s failure to act has left the U.S. trailing behind competitors.[71] Consumer advocacy groups, on the other hand, argued that the U.S. is “behind in establishing comprehensive safeguards.”[72] From a local regulator’s perspective, the San Francisco Municipal Transportation Agency, under whose oversight many companies are testing self-driving cars, advocated for installing a “black box” in vehicles to capture crash data and for creating a national database of self-driving car incidents.[73]

According to press reports, shortly after the hearing, the House panel released a seven-section draft bill to advocacy groups for feedback, in addition to six other sections previously circulated.[74] These seven sections are: cybersecurity; consumer education; dual use safety; authorization of appropriations; executive staffing; crash data; and exclusion of trucks from the bill’s scope. The draft bill introduces extensive requirements aimed at preventing cyberattacks, including affirmative duties for manufacturers to appoint officers with cybersecurity responsibilities, voluntarily share lessons learned across the industry, and provide employee cybersecurity training and supervision. The draft bill also requires manufacturers to include accident and crash data collection systems in their AVs and to educate consumers.

B. DOT Acts on Updated Guidance for AV Industry

In January 2020, the U.S. Department of Transportation (“DOT”) published updated guidance for the regulation of the autonomous vehicle industry, “Ensuring American Leadership in Automated Vehicle Technologies,” or “AV 4.0.”[75] The guidance builds on the AV 3.0 guidance released in October 2018, which introduced guiding principles for AV innovation for all surface transportation modes and described DOT’s strategy to address existing barriers to potential safety benefits and progress.[76] AV 4.0 includes 10 principles to protect consumers, promote markets, and ensure a standardized federal approach to AVs. In line with previous guidance, the report promises to address legitimate public concerns about safety, security, and privacy without hampering innovation, relying heavily on industry self-regulation. However, the report also reiterates traditional disclosure and compliance standards that companies leveraging emerging technology should continue to follow.

The National Highway Traffic Safety Administration (“NHTSA”) announced in February 2020 that it had granted its first AV exemption, from three federal motor vehicle safety standards, to Nuro, a California-based company that plans to deliver packages with a robotic vehicle smaller than a typical car.[77] The exemption allows the company to produce and deploy no more than 5,000 of its “low-speed, occupant-less electric delivery vehicles” over a two-year period, which would be operated for local delivery services for restaurants and grocery stores.

C. DOT Issues First-Ever Proposal to Modernize Occupant Protection Safety Standards for AVs

Shortly after the release of AV 4.0, NHTSA in March 2020 issued its first-ever proposal to modernize occupant protection safety standards for AVs without manual controls: a Notice of Proposed Rulemaking ("Notice") intended "to improve safety and update rules that no longer make sense such as requiring manual driving controls on autonomous vehicles."[78] The Notice aims to "help streamline manufacturers' certification processes, reduce certification costs and minimize the need for future NHTSA interpretation or exemption requests." For example, the proposed regulation would apply front passenger seat protection standards to the traditional driver's seat of an AV, rather than safety requirements that are specific to the driver's seat. Nothing in the Notice would change existing occupant protection requirements for traditional vehicles with manual controls. The public has until May 29, 2020 to comment on the Notice.[79]

Given the fast pace of developments and the tangle of applicable rules, companies operating in this space should stay abreast of legal developments in the states and cities where they are developing or testing AVs, while recognizing that new federal regulations may ultimately preempt state authority over matters such as safety policies or the handling of passenger data.

V. EMPLOYMENT LAW/HIRING

A. Illinois Law Increases Transparency on AI Hiring Practices

On January 1, 2020, Illinois’ Artificial Intelligence Video Interview Act went into effect.[80] Under the Act, an employer using videotaped interviews when filling a position in Illinois may use AI to analyze the interview footage only if the employer:

- Gives the applicant notice (which need not be in writing) that the videotaped interview may be analyzed using AI to evaluate the applicant's fitness for the position.

- Provides the applicant with an explanation of how the AI works and what characteristics it uses to evaluate applicants.

- Obtains consent from the applicant to use AI for an analysis of the video interview.

- Keeps video recordings confidential by sharing the videos only with persons whose expertise or technology is needed to evaluate the applicant, and destroying both the video and all copies within 30 days after an applicant requests such destruction.

Employers in Illinois using AI-powered video interviewing will need to consider carefully how they are addressing the risk of AI-driven bias in their current operations, and whether their hiring practices fall within the scope of the new law. The Act does not define "artificial intelligence," specify what level of "explanation" is required, or state whether it applies to employers seeking to fill a position in Illinois regardless of where the interview takes place.

In February 2020, a lawsuit was filed in Chicago against Clarifai Inc., an AI company specializing in computer vision and visual recognition.[81] One of Clarifai's tools is a "demographics" model, which analyzes images and returns information on age, gender, and multicultural appearance for each detected face based on facial characteristics. The complaint alleges that, in violation of Illinois' Biometric Information Privacy Act ("BIPA"), Clarifai captured the profiles of tens of thousands of users of the dating site OkCupid and scanned each individual's facial geometry to create a "face database" of OkCupid users and train its own facial recognition tools. Under BIPA, companies can face damages of up to $5,000 for each intentional or reckless violation. While the Artificial Intelligence Video Interview Act currently remains the only law of its kind in the U.S., companies using automated technology in recruitment should expect the growing use of AI in hiring to lead to further regulation and legislation.

Meanwhile, the Equal Employment Opportunity Commission is investigating several cases involving claims that algorithms have been unlawfully excluding groups of workers during the recruitment process.[82]

B. New York City Aims to Regulate the Use of AI in Hiring

On February 27, 2020, the New York City Council introduced a bill to regulate the sale of “automated employment decision tool[s]” that filter candidates “for hire or for any term, condition or privilege of employment in a way that establishes a preferred candidate or candidates.”[83] If passed, the bill would go into effect on January 1, 2022.

The bill would require technology companies to conduct annual "bias audits" before selling AI-powered hiring tools in New York City. In addition, companies using such tools would have to notify each job candidate "within 30 days" of screening about the specific tool used to evaluate them and "the qualifications or characteristics that such tool was used to assess in the candidate."[84] Moreover, those selling AI-powered decision-making software would have to provide the purchaser of the software with the results of the annual bias audit.

Employers contemplating the use and implementation of AI-powered decision-making tools for hiring should exercise caution and ensure that these tools have been audited for discriminatory biases.

_______________________

[1] On February 11, 2020, the White House also announced 2021 budget plans to increase budgets related to AI to “pu[t] America in position to maintain its global leadership in science and technology[.]” See https://www.whitehouse.gov/briefings-statements/president-trumps-fy-2021-budget-commits-double-investments-key-industries-future/.

[2] Donald J. Trump, Executive Order on Maintaining American Leadership in Artificial Intelligence, The White House (Feb. 11, 2019), Exec. Order No. 13859, 84 Fed. Reg. 3967, available at https://www.whitehouse.gov/presidential-actions/executive-order-maintaining-american-leadership-artificial-intelligence/.

[3] White House AI Order Emphasizes Use for Citizen Experience, Meritalk (Apr. 18, 2019), available at https://www.meritalk.com/articles/white-house-ai-order-emphasizes-use-for-citizen-experience/.

[4] Director of the Office of Management and Budget, Guidance for Regulation of Artificial Intelligence Applications (Jan. 7, 2020), available at https://www.whitehouse.gov/wp-content/uploads/2020/01/Draft-OMB-Memo-on-Regulation-of-AI-1-7-19.pdf.

[5] For an in-depth analysis, please see our update, President Trump Issues Executive Order on “Maintaining American Leadership in Artificial Intelligence.”

[6] Regulations.gov, OMB Guidance for Regulation of Artificial Intelligence Applications, available at https://www.regulations.gov/docketBrowser?rpp=50&so=DESC&sb=postedDate&po=0&dct=PS&D=OMB-2020-0003.

[7] EC, White Paper on Artificial Intelligence – A European approach to excellence and trust, COM(2020) 65 (Feb. 19, 2020), available at https://ec.europa.eu/info/sites/info/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf.

[8] EC, Shaping Europe’s digital future: op-ed by Ursula von der Leyen, President of the European Commission, AC/20/260, available at https://ec.europa.eu/commission/presscorner/detail/en/AC_20_260.

[9] EC, A European strategy for data, COM(2020) 66 (Feb. 19, 2020), available at https://ec.europa.eu/info/files/communication-european-strategy-data_en.

[10] EC, Report on the safety and liability implications of Artificial Intelligence, the Internet of Things and robotics, COM(2020) 64 (Feb. 19, 2020), available at https://ec.europa.eu/info/files/commission-report-safety-and-liability-implications-ai-internet-things-and-robotics_en.

[11] Ursula von der Leyen, A Union that strives for more: My agenda for Europe, available at https://ec.europa.eu/commission/sites/beta-political/files/political-guidelines-next-commission_en.pdf.

[12] EC, Artificial Intelligence for Europe, COM(2018) 237 (Apr. 25, 2018), available at https://ec.europa.eu/digital-single-market/en/news/communication-artificial-intelligence-europe.

[13] H. Mark Lyon, Gearing Up For The EU’s Next Regulatory Push: AI, LA & SF Daily Journal (Oct. 11, 2019), available at https://www.gibsondunn.com/wp-content/uploads/2019/10/Lyon-Gearing-up-for-the-EUs-next-regulatory-push-AI-Daily-Journal-10-11-2019.pdf.

[14] The exact implications and requirements of the GDPR on AI based products and services are still not entirely clear; see further, Ahmed Baladi, Can GDPR hinder AI made in Europe?, Cybersecurity Law Report (July 10, 2019), available at https://www.gibsondunn.com/can-gdpr-hinder-ai-made-in-europe/.

[15] For further detail, see our 2019 Artificial Intelligence and Automated Systems Annual Legal Review.

[16] Coke Stewart (USPTO Senior Policy Advisor and Acting Chief of Staff), Remarks Delivered at Quarterly Meeting of Patent Public Advisory Committee, Tr. at p. 164 (Feb. 6, 2020), available at https://www.uspto.gov/sites/default/files/documents/PPAC_Transcript_20200206.pdf.

[17] Coke Stewart, USPTO AI Policy Update Presentation at pp. 6-7 (Feb. 6, 2020), available at https://www.uspto.gov/sites/default/files/documents/20200206_PPAC_AI_Policy_Update.pdf.

[18] Coke Stewart (USPTO Senior Policy Advisor and Acting Chief of Staff), Remarks Delivered at Quarterly Meeting of Patent Public Advisory Committee, Tr. at p. 169 (Feb. 6, 2020), available at https://www.uspto.gov/sites/default/files/documents/PPAC_Transcript_20200206.pdf.

[19] Laura Peter (Deputy Director of the USPTO), Remarks Delivered at Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software (Jan. 23, 2020), available at https://www.uspto.gov/about-us/news-updates/remarks-deputy-director-peter-trust-verify-informational-challenges.

[20] Laura Peter (Deputy Director of the USPTO), Remarks Delivered at Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software (Jan. 23, 2020), available at https://www.uspto.gov/about-us/news-updates/remarks-deputy-director-peter-trust-verify-informational-challenges.

[21] Gibson Dunn, USPTO Requests Public Comments on Patenting Artificial Intelligence Inventions (Aug. 28, 2019), https://www.gibsondunn.com/uspto-requests-public-comments-on-patenting-artificial-intelligence-inventions/.

[22] Fed. Reg. Vol. 84, No. 166 (Aug. 27, 2019), available at https://www.govinfo.gov/content/pkg/FR-2019-08-27/pdf/2019-18443.pdf.

[23] Laura Peter (Deputy Director of the USPTO), Remarks Delivered at Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software (Jan. 23, 2020), available at https://www.uspto.gov/about-us/news-updates/remarks-deputy-director-peter-trust-verify-informational-challenges.

[24] USPTO, Director's Forum: A Blog from USPTO's Leadership (Oct. 30, 2019), https://www.uspto.gov/blog/director/entry/uspto_issues_second_federal_register.

[25] USPTO, USPTO Posts Responses from Requests for Comments on Artificial Intelligence (Mar. 18, 2020), https://www.uspto.gov/about-us/news-updates/uspto-posts-responses-from-requests-comments-artificial-intelligence.

[26] Laura Peter (Deputy Director of the USPTO), Remarks Delivered at Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software (Jan. 23, 2020), available at https://www.uspto.gov/about-us/news-updates/remarks-deputy-director-peter-trust-verify-informational-challenges.

[27] Laura Peter (Deputy Director of the USPTO), Remarks Delivered at Trust, but Verify: Informational Challenges Surrounding AI-Enabled Clinical Decision Software (Jan. 23, 2020), available at https://www.uspto.gov/about-us/news-updates/remarks-deputy-director-peter-trust-verify-informational-challenges.

[28] 85 Fed. Reg. 1825 (Jan. 13, 2020), available at https://www.federalregister.gov/documents/2020/01/13/2020-00261/request-for-comments-on-a-draft-memorandum-to-the-heads-of-executive-departments-and-agencies.

[29] Gibson Dunn, The Impact of New USPTO Eligibility Guidelines on Artificial Intelligence-related Inventions (Jan. 11, 2019), available at https://www.gibsondunn.com/impact-of-new-uspto-eligibility-guidelines-on-artificial-intelligence-related-inventions/.

[30] U.S.P.T.O., October 2019 Update: Subject Matter Eligibility (Oct. 17, 2019), https://www.uspto.gov/sites/default/files/documents/peg_oct_2019_update.pdf.

[31] Id. at p. 1.

[32] Ibid.

[33] Id. at p. 11, Fig. 2.

[34] Ibid.

[35] Ibid.

[36] Id. at p. 2.

[37] Id. at p. 11, Fig. 2.

[38] Id. at p. 10, Fig. 1.

[39] Id. at p. 2.

[40] Ibid.

[41] Id. at p. 3.

[42] Ibid.

[43] Id. at p. 7.

[44] Id. at p. 8 (emphasis in original).

[45] Ibid.

[46] Id. at p. 9.

[47] Ibid.

[48] Id. at p. 11.

[49] Id. at pp. 12-13.

[50] Id. at p. 12.

[51] Id. at p. 15.

[52] Id. at p. 17.

[53] USPTO, App’x 1 to the October 2019 Update: Subject Matter Eligibility Life Sciences & Data Processing Examples, at 31, available at https://www.uspto.gov/sites/default/files/documents/peg_oct_2019_app1.pdf.

[54] Ibid.

[55] Ibid.

[56] Id. at pp. 32-41.

[57] Ibid.

[58] WIPO Conversation on Intellectual Property and Artificial Intelligence Second Session, Draft Issues Paper on Intellectual Property Policy and Artificial Intelligence (Dec. 13, 2019), available at https://www.wipo.int/edocs/mdocs/mdocs/en/wipo_ip_ai_2_ge_20/wipo_ip_ai_2_ge_20_1.pdf.

[59] See https://www.wipo.int/about-ip/en/artificial_intelligence/submissions-search.jsp?type_id=2416&territory_id=&issue_id=.

[61] WIPO, Artificial Intelligence and Intellectual Property Policy (Apr. 8, 2020), https://www.wipo.int/about-ip/en/artificial_intelligence/policy.html; WIPO, WIPO Conversation on Intellectual Property and Artificial Intelligence: Second Session, (Apr. 8, 2020), https://www.wipo.int/meetings/en/details.jsp?meeting_id=55309.

[62] European Patent Office, EPO refuses DABUS patent applications designating a machine inventor (Dec. 20, 2019), available at https://www.epo.org/news-issues/news/2019/20191220.html.

[63] House Committee on Energy and Commerce, Re: Hearing on “Autonomous Vehicles: Promises and Challenges of Evolving Automotive Technologies” (Feb. 7, 2020), available at https://docs.house.gov/meetings/IF/IF17/20200211/110513/HHRG-116-IF17-20200211-SD002.pdf.

[64] For more information, please see our legal update Accelerating Progress Toward a Long-Awaited Federal Regulatory Framework for Autonomous Vehicles in the United States and, most recently, our 2019 Artificial Intelligence and Automated Systems Annual Legal Review.

[65] H.R. 3388, 115th Cong. (2017).

[66] U.S. Senate Committee on Commerce, Science and Transportation, Press Release, Oct. 24, 2017, available at https://www.commerce.senate.gov/public/index.cfm/pressreleases?ID=BA5E2D29-2BF3-4FC7-A79D-58B9E186412C.

[67] Letter from Democratic Senators to U.S. Senate Committee on Commerce, Science and Transportation (Mar. 14, 2018), available at https://morningconsult.com/wp-content/uploads/2018/11/2018.03.14-AV-START-Act-letter.pdf.

[68] U.S. Senate Committee on Commerce, Science and Transportation, Press Release, Oct. 24, 2017, available at https://www.commerce.senate.gov/public/index.cfm/pressreleases?ID=BA5E2D29-2BF3-4FC7-A79D-58B9E186412C.

[69] Zach George, Congress nears agreement on comprehensive framework for autonomous vehicles (Feb. 18, 2020), available at https://www.naco.org/blog/congress-nears-agreement-comprehensive-framework-autonomous-vehicles.

[70] See National Conference of State Legislatures, Self-Driving Vehicles Enacted Legislation, available at https://www.ncsl.org/research/transportation/autonomous-vehicles-self-driving-vehicles-enacted-legislation.aspx.

[71] The Hill, House lawmakers close to draft bill on self-driving cars (Feb. 11, 2020), available at https://thehill.com/policy/technology/482628-house-lawmakers-close-to-draft-bill-on-self-driving-cars.

[72] Automotive News, Groups call on U.S. lawmakers to develop ‘meaningful legislation’ for AVs (Feb. 11, 2020), available at https://www.autonews.com/mobility-report/groups-call-us-lawmakers-develop-meaningful-legislation-avs.

[73] The Verge, We still can’t agree how to regulate self-driving cars (Feb. 11, 2020), available at https://www.theverge.com/2020/2/11/21133389/house-energy-commerce-self-driving-car-hearing-bill-2020.

[74] Bloomberg Government, Self-Driving Car Bill Drafts Silent on Maker Accountability (1), available at https://about.bgov.com/news/self-driving-car-bill-drafts-silent-on-maker-accountability-1/. The previously released draft sections cover federal, state and local roles; exemptions; rulemakings; FAST Act testing expansion; advisory committees; and definitions. See https://www.mema.org/draft-bipartisan-driverless-car-bill-offered-house-panel.

[75] U.S. Dep’t of Transp., Ensuring American Leadership in Automated Vehicle Technologies: Automated Vehicles 4.0 (Jan. 2020), available at https://www.transportation.gov/sites/dot.gov/files/docs/policy-initiatives/automated-vehicles/360956/ensuringamericanleadershipav4.pdf.

[76] U.S. Dep't of Transp., Preparing for the Future of Transportation: Automated Vehicles 3.0 (Oct. 2018), available at https://www.transportation.gov/sites/dot.gov/files/docs/policy-initiatives/automated-vehicles/320711/preparing-future-transportation-automated-vehicle-30.pdf.

[77] Congressional Research Service, Issues in Autonomous Vehicle Testing and Deployment (Feb. 11, 2020), available at https://fas.org/sgp/crs/misc/R45985.pdf; U.S. Dep't of Transp., NHTSA Grants Nuro Exemption Petition for Low-Speed Driverless Vehicle, available at https://www.nhtsa.gov/press-releases/nuro-exemption-low-speed-driverless-vehicle.

[78] U.S. Dep’t of Transp., NHTSA Issues First-Ever Proposal to Modernize Occupant Protection Safety Standards for Vehicles Without Manual Controls, available at https://www.nhtsa.gov/press-releases/adapt-safety-requirements-ads-vehicles-without-manual-controls.

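[79] NHTSA, Occupant Protection for Automated Driving Systems, Notice of Proposed Rulemaking (Mar. 30, 2020) (proposed amendments to 49 C.F.R. pt. 571), available at https://www.federalregister.gov/documents/2020/03/30/2020-05886/occupant-protection-for-automated-driving-systems.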

[80] H.B. 2557, 2019-2020 Reg. Sess. (Ill. 2019) (101st Gen. Assembly), available at http://www.ilga.gov/legislation/101/HB/PDF/10100HB2557lv.pdf.

[81] Stein v. Clarifai, Inc., No. 2020-CH-01810 (Ill. Cir. Ct. Feb. 13, 2020).

[82] Chris Opfer, AI Hiring Could Mean Robot Discrimination Will Head to Courts, Bloomberg (Nov. 12, 2019), https://news.bloomberglaw.com/daily-labor-report/ai-hiring-could-mean-robot-discrimination-will-head-to-courts.

[83] Sale of Automated Employment Decision Tools, Int. No. 1894 (Feb. 27, 2020).

[84] Id.

The following Gibson Dunn lawyers prepared this client update: H. Mark Lyon, Frances Waldmann, Brooke Myers Wallace, Tony Bedel, Emily Lamm and Derik Rao.

Gibson Dunn’s lawyers are available to assist in addressing any questions you may have regarding these developments. Please contact the Gibson Dunn lawyer with whom you usually work, any member of the firm’s Artificial Intelligence and Automated Systems Group, or the following authors:

H. Mark Lyon – Palo Alto (+1 650-849-5307)

Frances A. Waldmann – Los Angeles (+1 213-229-7914)

Please also feel free to contact any of the following practice group members:

Artificial Intelligence and Automated Systems Group:

H. Mark Lyon – Chair, Palo Alto (+1 650-849-5307)

J. Alan Bannister – New York (+1 212-351-2310)

Ari Lanin – Los Angeles (+1 310-552-8581)

Robson Lee – Singapore (+65 6507 3684)

Carrie M. LeRoy – Palo Alto (+1 650-849-5337)

Alexander H. Southwell – New York (+1 212-351-3981)

Eric D. Vandevelde – Los Angeles (+1 213-229-7186)

Michael Walther – Munich (+49 89 189 33 180)

© 2020 Gibson, Dunn & Crutcher LLP

Attorney Advertising: The enclosed materials have been prepared for general informational purposes only and are not intended as legal advice.